WATSON SERVICES FOR CORE ML

Proof-of-Concept to Platform: Pioneering Enterprise Mobile AI

I designed the flagship proof-of-concept for Apple + IBM's Watson Services for Core ML that validated on-device AI for field service, launched at Think 2018, and evolved into IBM Inspector Portable—a product still in market today.

PRODUCT IBM Watson Services for Core ML

TEAM UX Lead, Senior UX Designer (Myself), Business Analyst, Architect, Tech Lead, Developers, Project Manager

CLIENT Coca-Cola (Flagship Pilot Customer)

PLATFORM IMPACT IBM Inspector Portable (Current IBM product)

ROLE Senior UX Designer

YEAR 2017 - 2019

The Opportunity

Prove that on-device machine learning could work reliably in high-stakes field service environments.

IBM needed a flagship proof-of-concept for Watson Services for Core ML—a new offering that would combine Apple's on-device machine learning framework with IBM's enterprise AI capabilities. I joined the team to design this proof-of-concept with Coca-Cola as the strategic pilot customer.

The Business Context

190 billion servings of Coca-Cola fountain beverages are served in over 200 countries every day.

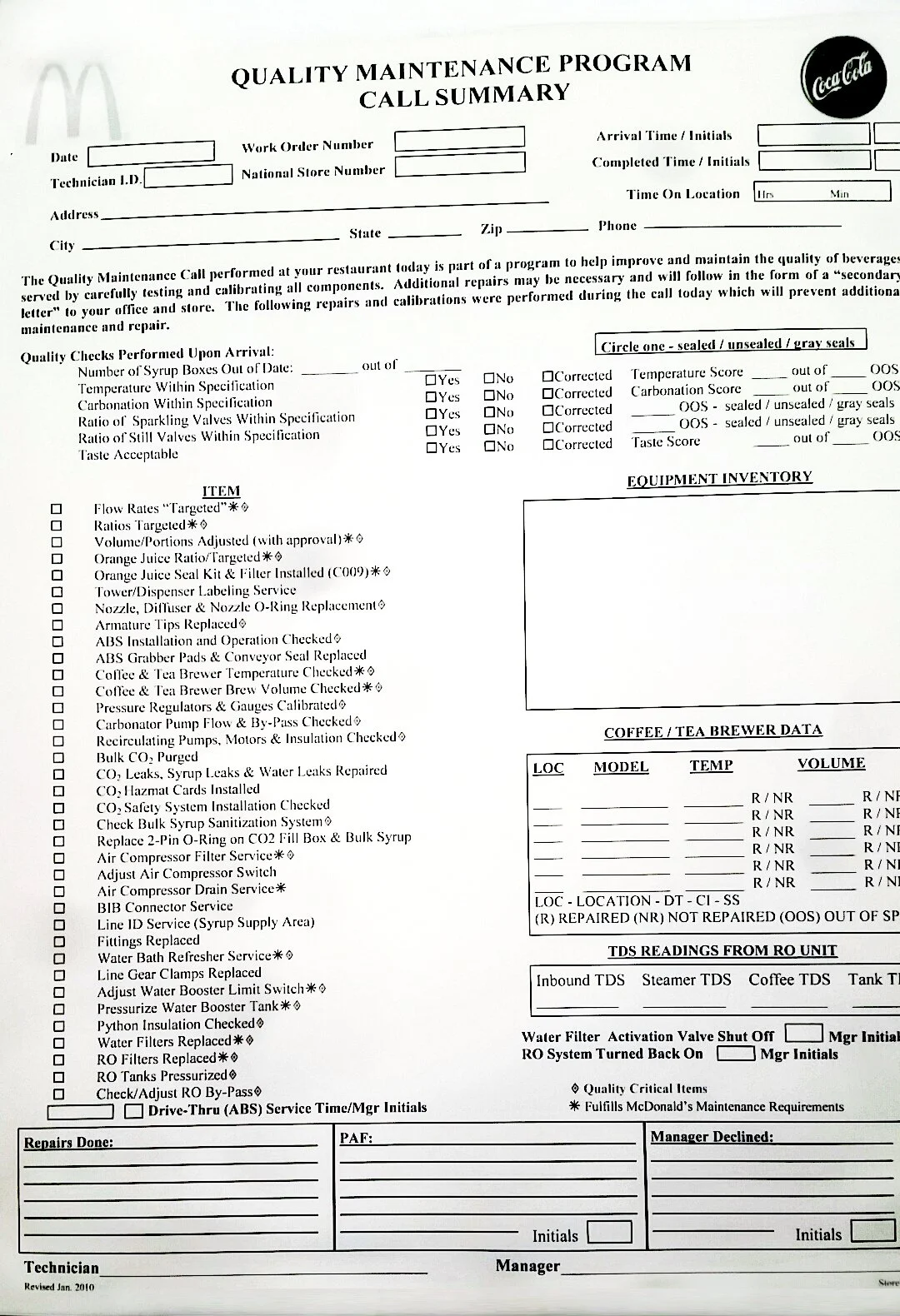

Thousands of field technicians conduct quality assurance inspections on vending machines and fountain equipment. Their existing process was inefficient and inconsistent, relying on paper checklists and manual data entry. Coca-Cola approached the IBM+Apple team to explore how emerging technologies could transform their field operations.

Five Strategic Phases

The work evolved through five distinct phases, each building on insights from the previous:

PHASE 1

Pluto

Before Coca-Cola, I worked on "Pluto"—an early proof-of-concept for printer technicians that established the core technical architecture.

This is where I made fundamental design decisions about how conversational AI, visual recognition, and augmented reality could work together in a single field service application.

KEY DESIGN DECISIONS

Conversational flow that felt natural, not robotic

Visual recognition that worked in varied lighting conditions

AR overlays that guided repair without overwhelming the interface

Offline functionality (critical for field environments with spotty connectivity)

Pluto proved the technology could work. Next we needed to prove it solved real business problems.

PHASE 2

Apple Design Lab with Coca-Cola

With the technical foundation validated, I partnered with our UX Lead to run a 3-day intensive Design Lab at Apple's headquarters with Coca-Cola stakeholders and three field technicians.

Day 1

Understanding the Current State

We interviewed field technicians and documented their workflows on whiteboards. Four critical questions guided our research:

What tasks are technicians responsible for completing?

What tools or resources currently help them?

What are their pain points?

What parts of their job shouldn’t change?

Key Insights

Technicians spent excessive time searching for information across multiple systems

Paper-based inspections were redundant and error-prone

Each store visit required manual documentation, consuming time that could be spent on actual repairs

Experienced technicians had tacit knowledge that wasn't captured or shared

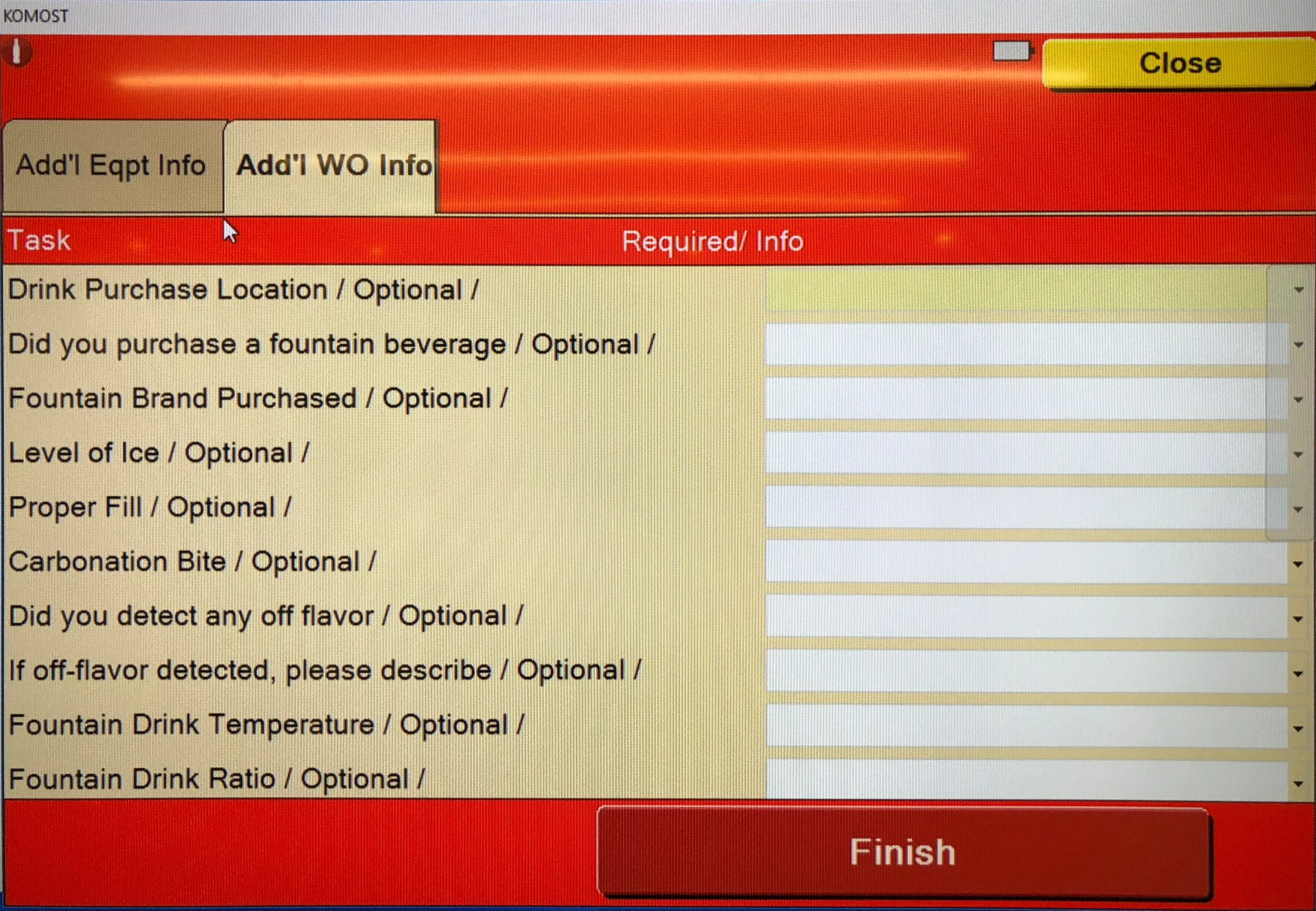

Regional equipment variations meant standardized checklists didn't always apply

Day 2

Envisioning the Future State

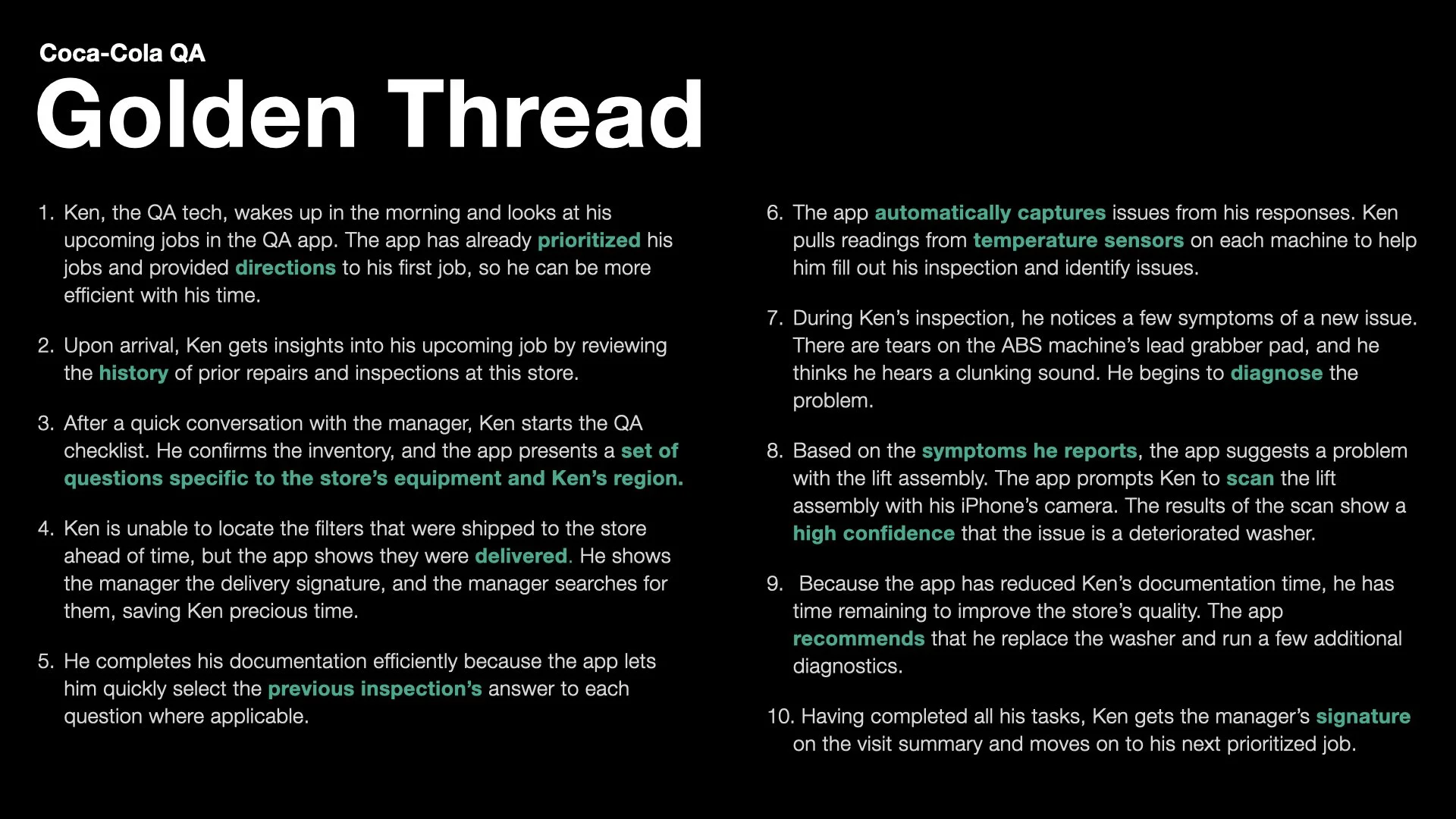

I drafted a "Golden Thread"—a step-by-step narrative of how the app would transform a technician's workday. After aligning stakeholders on this vision, we moved to rapid whiteboarding, iterating on flows and interfaces in real-time with technician feedback.

Day 3

From Sketch to High Fidelity

Using validated sketches from Day 2, I created high-fidelity prototypes, establishing the core flow and visual design. We tested these with technicians to validate the approach before moving to development.

EXPANDING THE BRIEF

During this session, I also presented concepts for Apple Watch integration that would enable visual access to the inside of machines during complex repairs. This wasn't in the original brief, but it solved a real problem technicians described—needing both hands free while accessing information. This expanded thinking helped win Coca-Cola's commitment to the pilot.

PHASE 3

Agile Development & AI Training

Following the Design Lab, we moved into agile sprint development to build the working application and translate validated concepts into a production-ready system.

Building the AI Foundation

I partnered with our technical team to train Watson's visual recognition using real instruction manuals and equipment documentation. We trained the system to identify specific components, recognize failure states, and account for contextual variations across equipment models and environments.

Designing the Complete Inspection Workflow

The Design Lab established the core flow. Real inspections required far more detail. I designed the full QA inspection workflow with precise data capture points, validation rules, and error handling—every inspection step needed specific data fields, conditional logic based on equipment type, and fail-safes for edge cases.
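As a rough illustration of what "specific data fields, conditional logic based on equipment type, and fail-safes" can look like, here is a minimal Python sketch. It is not the project's actual implementation: the field names, the equipment types, and the 0-5 C temperature range are all hypothetical, chosen only to show the shape of the rules.

```python
from dataclasses import dataclass
from typing import Callable, Optional


def accept_anything(value):
    """Default validator: every non-empty value passes."""
    return None


def temperature_in_range(value):
    """Hypothetical rule: dispense temperature must be a number from 0 to 5 C."""
    if not isinstance(value, (int, float)):
        return "temperature must be a number"
    if not 0 <= value <= 5:
        return "temperature outside 0-5 C"
    return None


@dataclass
class InspectionField:
    name: str
    required: bool = True
    # Equipment types this field applies to; None means every type.
    applies_to: Optional[frozenset] = None
    # Returns an error message, or None when the value is acceptable.
    validate: Callable = accept_anything


# Hypothetical field list for a single inspection step.
FIELDS = [
    InspectionField("serial_number"),
    InspectionField("dispense_temp_c", validate=temperature_in_range),
    InspectionField("nozzle_count", applies_to=frozenset({"fountain"})),
    InspectionField("notes", required=False),
]


def check_step(equipment_type, answers):
    """Return a dict of field name -> error message; empty means the step is valid."""
    errors = {}
    for f in FIELDS:
        # Conditional logic: skip fields that do not apply to this equipment.
        if f.applies_to is not None and equipment_type not in f.applies_to:
            continue
        value = answers.get(f.name)
        if value is None or value == "":
            if f.required:
                errors[f.name] = "required"
            continue
        message = f.validate(value)
        if message:
            errors[f.name] = message
    return errors
```

Calling `check_step("fountain", {"serial_number": "A1", "dispense_temp_c": 9})` reports both the out-of-range temperature and the missing `nozzle_count`, while the same answers with a valid temperature pass for a vending machine, where `nozzle_count` is not asked.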

Agile Iteration

Through weekly sprints, we refined the interface as AI capabilities matured, adjusting the UX based on model accuracy, response times, and on-device processing constraints. Each sprint surfaced new design challenges: communicating AI confidence levels without undermining user trust, degrading gracefully when visual recognition failed, and balancing automation with user control.
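One common way to approach the confidence and degradation challenges described above is to map recognition confidence onto a small number of interface modes. The Python sketch below is an assumption-laden illustration, not the app's real logic: the thresholds, mode names, and `Prediction` type are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values would be tuned against model accuracy.
CONFIRM_THRESHOLD = 0.90
SUGGEST_THRESHOLD = 0.60


@dataclass
class Prediction:
    label: str
    confidence: float  # 0.0 to 1.0, as reported by the recognition model


def present(predictions):
    """Choose how to show recognition results to the technician.

    confirm: one high-confidence match, the user only has to approve it
    suggest: a short ranked list, the user picks the right one
    manual:  recognition failed or is too uncertain, fall back to manual entry
    """
    if not predictions:
        return {"mode": "manual", "options": []}
    ranked = sorted(predictions, key=lambda p: p.confidence, reverse=True)
    top = ranked[0]
    if top.confidence >= CONFIRM_THRESHOLD:
        return {"mode": "confirm", "options": [top.label]}
    if top.confidence >= SUGGEST_THRESHOLD:
        # Offer up to three candidates so the user stays in control.
        return {"mode": "suggest", "options": [p.label for p in ranked[:3]]}
    return {"mode": "manual", "options": []}
```

In this sketch the technician never sees a raw percentage. A confident result asks for a one-tap confirmation, a middling result becomes a short list to choose from, and a failed recognition drops back to manual entry, so the inspection is never blocked.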

PHASE 4

Ride-Alongs with Real Technicians

A few weeks after the Design Lab, we took the developed app into the field, riding along with two experienced QA technicians at McDonald's locations and observing actual machine inspections. This revealed critical gaps between our Design Lab assumptions and field reality.

Field Insights

What Worked

The streamlined inspection flow worked well and saved significant time

What Didn’t

The "Repair with AR" feature was impressive but impractical—experienced technicians didn't need it, and novices wouldn't trust it for complex repairs

What Mattered Most

Technicians valued quick access to previous inspection data and service history more than any AI feature

REFINEMENT CONSIDERATIONS

The "Repair with AR" feature I designed functioned well: the video shows it successfully guiding repairs on a reverse osmosis machine. We knew experienced technicians wouldn’t necessarily need it; it would be more helpful for new technicians.

PHASE 5

Think 2018 & Platform Expansion

After the field studies, we refined the prototypes for IBM's Think 2018 conference, where Watson Services for Core ML launched publicly.

The Coca-Cola work was showcased as the flagship example of on-device AI transforming enterprise field service.

To demonstrate the platform's versatility, we also trained the system on Arduino Uno components, showing how the same framework could adapt across industries with different training data.

The success proved the approach and opened new opportunities. We developed a white-label version that could be customized across industries and use cases, establishing a repeatable framework for AI-powered field service applications. What began as a proof-of-concept for beverage quality assurance became a platform strategy.

The Solution

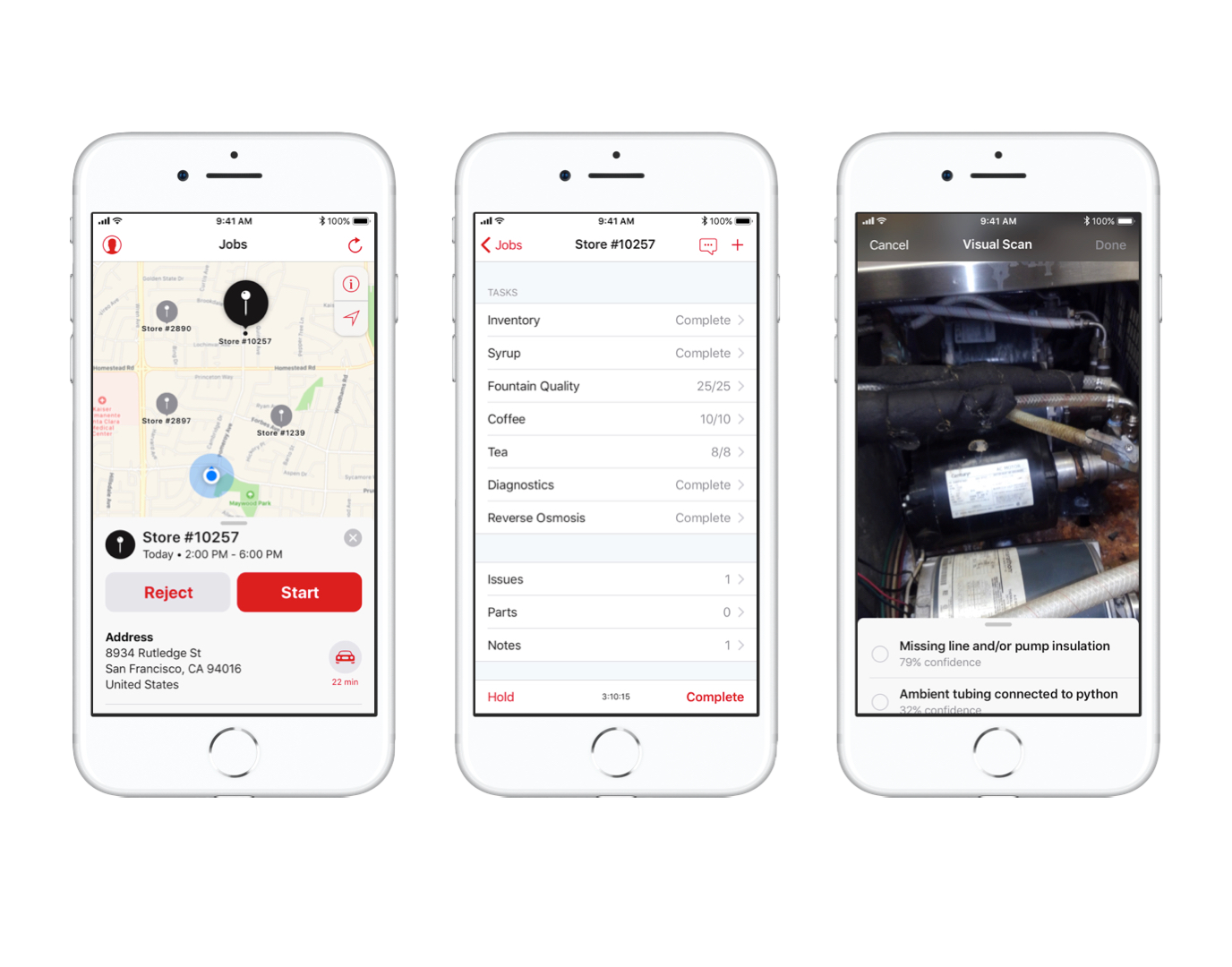

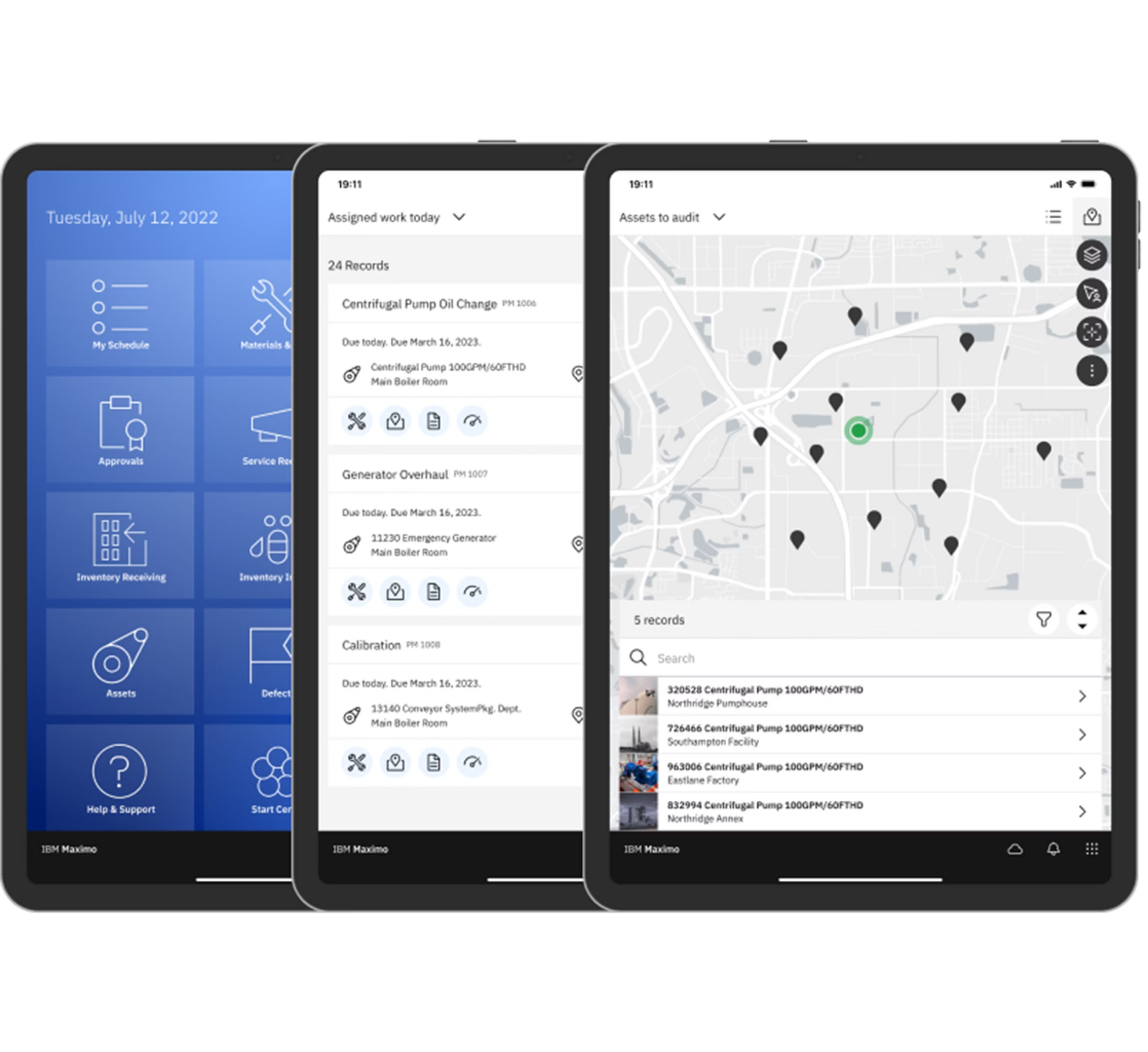

The app combines machine learning (Apple's Core ML), AI for visual recognition (IBM Watson), and augmented reality (AR).

Locate

Technicians can locate jobs, view service history, and access relevant store information in real time.

Inspect

A streamlined inspection flow lets technicians track issues, parts, and data throughout each phase of the job.

Identify

Watson visual recognition provides immediate on-device results, identifying equipment problems or parts without connectivity delays.

Diagnose

Machine learning helps technicians diagnose equipment issues faster by analyzing symptoms and suggesting likely causes.

Repair

Augmented reality overlays provide step-by-step repair guidance directly on the equipment, eliminating the need to search through manuals.

Chat

A conversational AI assistant provides contextual help and answers questions about equipment, parts, and procedures.

Learn

The system improves over time by collecting user-submitted photos and feedback, expanding its visual recognition accuracy and diagnostic capabilities.

Outcomes

Product Launch & Recognition

Officially integrated into IBM's Watson Services offerings from 2018 to 2022

Featured on Apple's developer site as an exemplar of Core ML capabilities

Won IBM's Outstanding Technical Achievement Award (the highest internal technical honor)

Generated close to 1 billion media impressions across Fortune, Forbes, TechCrunch, Apple Insider, ZDNet, Computerworld, and 8+ other major tech publications

MEDIA REACH

1B

impressions

Platform Evolution

The success of this proof-of-concept validated the approach and led to an entire product line and platform offering for asset inspection called IBM Maximo. The design patterns and technical architecture I established evolved into IBM Inspector Portable, a product currently on the market that enables handheld visual inspections on iPhone and iPad.